3D Visual Grounding with Transformers

Project for Advanced Deep Learning for Computer Vision

Abstract

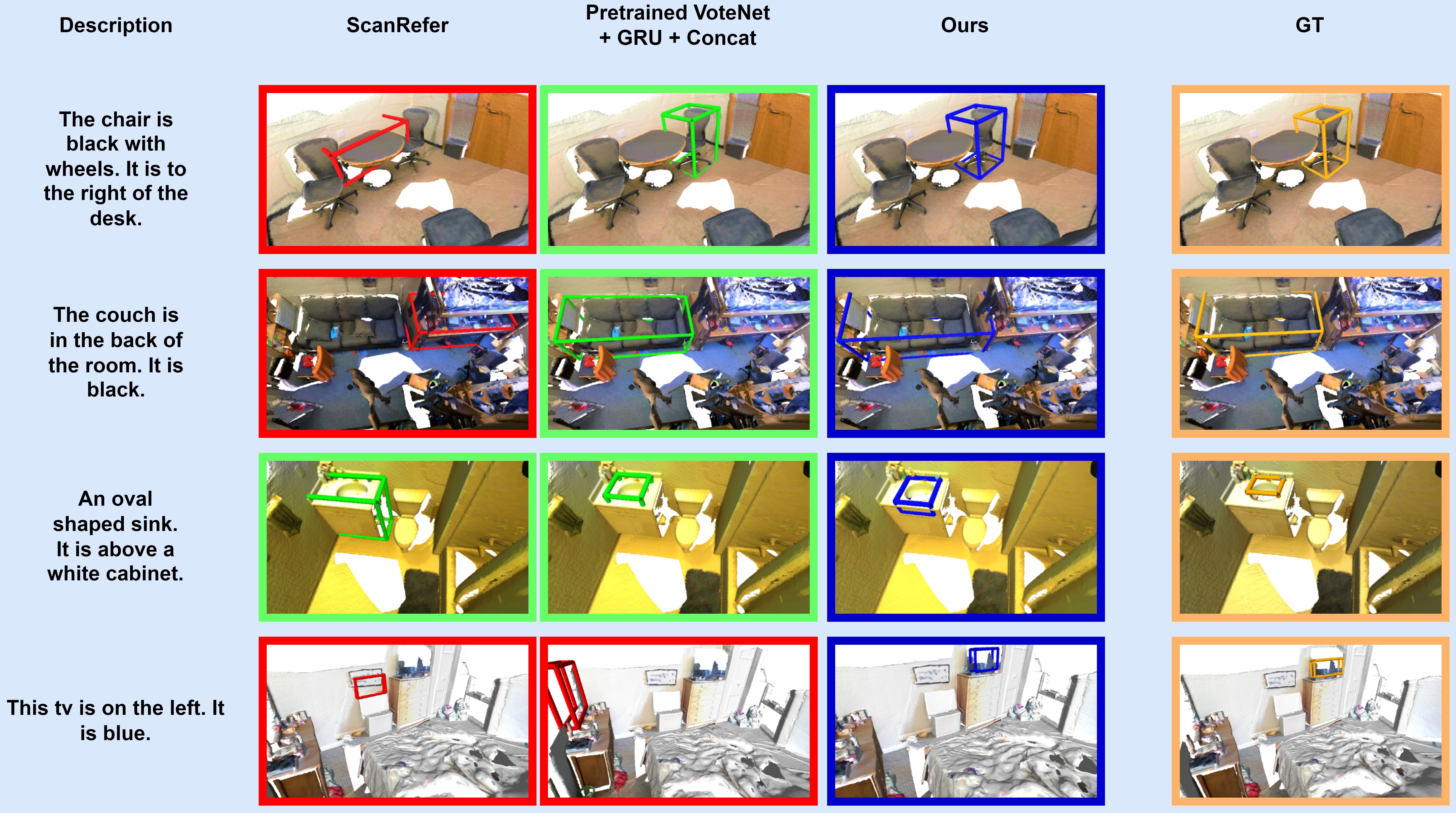

3D visual grounding lies at the intersection of 3D object detection and natural language understanding. This work develops a transformer architecture that predicts a bounding box around a target object specified by a natural-language description. Compared with VoteNet, which previous work has often employed, transformers are better suited to 3D object detection: they capture long-range context better and can operate on variable-sized inputs, which makes them a natural fit for 3D point clouds. We show that using 3DETR-m as the object detection framework improves 3D visual grounding performance. Additionally, we introduce a transformer into the module that matches textual and visual features, since self-attention is well suited to capturing the relevant interdependencies between them. Replacing the object detector with 3DETR-m and adding a vanilla transformer encoder to the matching module yields an improvement of around 2.5% over the ScanRefer architecture.
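To make the idea of a transformer in the matching module more concrete, below is a minimal NumPy sketch under stated assumptions; it is not the project's implementation. It assumes a simple fusion in which one sentence embedding is concatenated to every detected proposal's feature, followed by a single-head, single-layer transformer encoder so that proposals can attend to one another before each receives a confidence score. The function names (`match`, `encoder_layer`, `init_params`), the feature sizes, and the random weights are all hypothetical, chosen for illustration only.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Per-token normalization (no learned scale/shift in this sketch).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def self_attention(tokens, w_q, w_k, w_v):
    # Single-head scaled dot-product self-attention over a (K, d) token matrix.
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def encoder_layer(tokens, p):
    # One post-norm encoder layer: attention and feed-forward, each with a residual.
    h = layer_norm(tokens + self_attention(tokens, p["w_q"], p["w_k"], p["w_v"]))
    ff = np.maximum(0.0, h @ p["w_1"]) @ p["w_2"]
    return layer_norm(h + ff)

def init_params(d_v, d_l, d, d_ff, rng, scale=0.1):
    # Hypothetical random weights; a trained model would learn these.
    shapes = {"w_in": (d_v + d_l, d), "w_q": (d, d), "w_k": (d, d), "w_v": (d, d),
              "w_1": (d, d_ff), "w_2": (d_ff, d), "w_out": (d, 1)}
    return {name: rng.normal(scale=scale, size=s) for name, s in shapes.items()}

def match(proposal_feats, sentence_feat, p):
    # Fuse each of the K proposal features with the sentence embedding,
    # let proposals attend to each other, then score each one: (K,) logits.
    k = proposal_feats.shape[0]
    fused = np.concatenate([proposal_feats, np.tile(sentence_feat, (k, 1))], axis=1)
    tokens = encoder_layer(fused @ p["w_in"], p)
    return (tokens @ p["w_out"]).ravel()

# Usage with hypothetical sizes: 8 proposals, 128-d visual and 256-d text features.
rng = np.random.default_rng(0)
params = init_params(d_v=128, d_l=256, d=64, d_ff=128, rng=rng)
proposals = rng.normal(size=(8, 128))
sentence = rng.normal(size=256)
logits = match(proposals, sentence, params)
target = int(np.argmax(logits))  # index of the proposal predicted as the referred object
```

Because the sketch adds no positional encoding, the encoder is permutation-equivariant: reordering the proposals reorders the scores identically, which suits the unordered set of boxes a detector such as 3DETR-m produces.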